Lecture 2 Goals and Types of Evaluation

March 16, 2024

Recap of Lecture 1

- Get to know each other

- John Snow and evidence-based analysis

- Evidence-based policy

- Global clean energy supply chain

- Queens curbside organics

- Capturing the wedage

- Governors Island and other cases

Program & Program Evaluation

Program:

A program is a set of resources and activities directed toward one or more common goals, typically under the direction of a single manager or management team.

Program Evaluation:

Program evaluation is the application of systematic methods to address questions about program operations and results. It may include ongoing monitoring of a program as well as one-shot studies of program processes or program impact. The approaches used are based on social science research methodologies and professional standards.

Source: Newcomer, Hatry, and Wholey (2015)

Five questions to ask

- Can the results of the evaluation influence decisions about the program?

- Can the evaluation be done in time to be useful?

- Is the program significant enough to merit evaluation?

- Is program performance viewed as problematic?

- Where is the program in its development?

Source: Newcomer, Hatry, and Wholey (2015)

Types of evaluation

Ongoing ↔︎ One-Shot

Objective Observers ↔︎ Participatory

Goal-Oriented ↔︎ “Goal-Free”

Quantitative ↔︎ Qualitative

Ex Ante ↔︎ Post Program

Problem Orientation ↔︎ Non-Problem

Source: Newcomer, Hatry, and Wholey (2015)

Types and uses of evaluation

| Evaluation Types | When to use | What it shows | Why it is useful |

|---|---|---|---|

| Formative Evaluation; Evaluability Assessment; Needs Assessment | During the development of a new program; when an existing program is being modified or is being used in a new setting or with a new population. | Whether the proposed program elements are likely to be needed, understood, and accepted by the population you want to reach; the extent to which an evaluation is possible, based on the goals and objectives. | Allows modifications to be made to the plan before full implementation begins; maximizes the likelihood that the program will succeed. |

| Process Evaluation; Program Monitoring | As soon as program implementation begins; during operation of an existing program. | How well the program is working; the extent to which the program is being implemented as designed; whether the program is accessible and acceptable to its target population. | Provides an early warning for any problems that may occur; allows programs to monitor how well their program plans and activities are working. |

| Outcome Evaluation; Objectives-Based Evaluation | After the program has made contact with at least one person or group in the target population. | The degree to which the program is having an effect on the target population’s behaviors. | Tells whether the program is being effective in meeting its objectives. |

| Economic Evaluation: Cost Analysis, Cost-Effectiveness Evaluation, Cost-Benefit Analysis, Cost-Utility Analysis | At the beginning of a program; during the operation of an existing program. | What resources are being used in a program and their costs (direct and indirect) compared to outcomes. | Provides program managers and funders a way to assess cost relative to effects: “how much bang for your buck.” |

| Impact Evaluation | During the operation of an existing program at appropriate intervals; at the end of a program. | The degree to which the program meets its ultimate goal on an overall rate of STD transmission (how much has program X decreased the morbidity of an STD beyond the study population). | Provides evidence for use in policy and funding decisions. |

Source: CDC
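The economic evaluation row compares program costs (direct and indirect) with outcomes. The sketch below illustrates that arithmetic for a cost-effectiveness ratio and a benefit-cost ratio. The function names and all figures are hypothetical and are not taken from the CDC source.

```python
def cost_effectiveness_ratio(total_cost: float, units_of_outcome: float) -> float:
    """Cost per unit of outcome, e.g. dollars per case averted."""
    if units_of_outcome <= 0:
        raise ValueError("units_of_outcome must be positive")
    return total_cost / units_of_outcome


def benefit_cost_ratio(total_benefit: float, total_cost: float) -> float:
    """Monetized benefits divided by costs; above 1 means benefits exceed costs."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return total_benefit / total_cost


# Hypothetical program figures, for illustration only.
direct_cost = 80_000       # staff, supplies
indirect_cost = 20_000     # overhead, participant time
total_cost = direct_cost + indirect_cost
cases_averted = 250        # outcome measured in natural units
monetized_benefit = 150_000

print(cost_effectiveness_ratio(total_cost, cases_averted))   # 400.0 per case averted
print(benefit_cost_ratio(monetized_benefit, total_cost))     # 1.5
```

Cost-effectiveness keeps the outcome in natural units, while cost-benefit analysis requires putting a monetary value on the outcome, which is often the harder and more contestable step.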

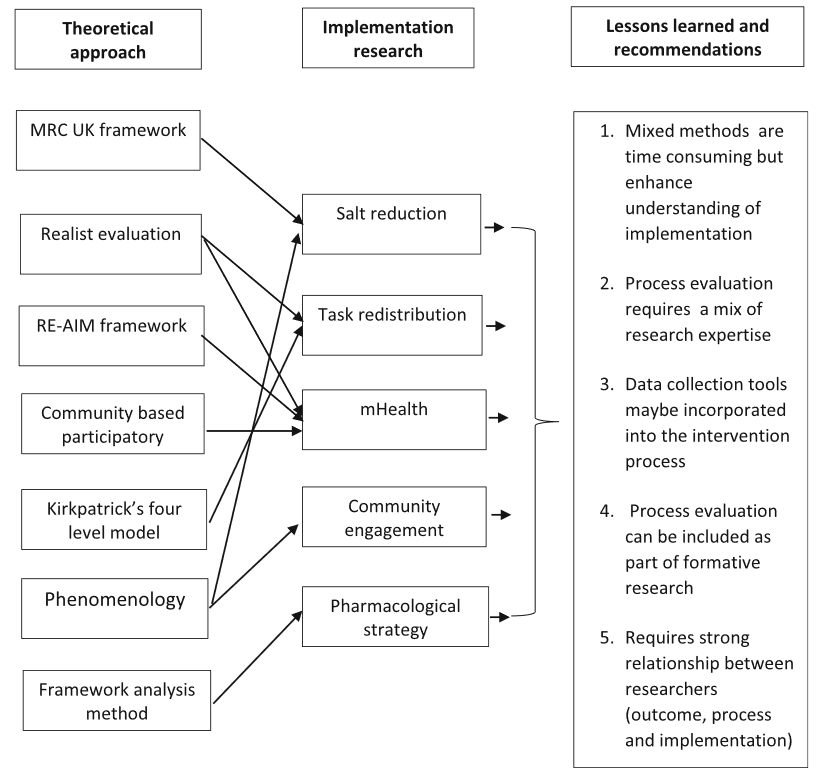

Process evaluation can be very useful

Source: Limbani et al. (2019)

Exploratory evaluation

| Approach | Purpose | Time |

|---|---|---|

| Evaluability assessment | Assess whether programs are ready for useful evaluation; get agreement on program goals and evaluation criteria; clarify the focus and intended use of further evaluation. | 1 to 6 months; 2 staff-weeks to 3 staff-months |

| Rapid feedback evaluation | Estimate program effectiveness in terms of agreed-on program goals; indicate the range of uncertainty in the estimates; produce tested designs for more definitive evaluation; clarify the focus and intended use of further evaluation. | 3 to 6 months; 3 to 12 staff-months |

| Evaluation synthesis | Synthesize findings of prior research and evaluation studies. | 1 to 4 months; 1 to 3 staff-months |

| Small-sample studies | Estimate program effectiveness in terms of agreed-on program goals; produce tested measures of program performance. | 1 week to 6 months; 1 staff-week to 12 staff-months |

Source: Newcomer, Hatry, and Wholey (2015)

Evaluability Standards: Readiness for Useful Evaluation

- Program goals are agreed on and realistic.

- Information needs are well defined.

- Evaluation data are obtainable.

- Intended users are willing and able to use evaluation information.

Source: Newcomer, Hatry, and Wholey (2015)
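The four standards above can be read as a simple readiness checklist. The sketch below is one way to record them. The class and field names are hypothetical, and treating each standard as a yes/no judgment is a simplifying assumption that the source does not make.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class EvaluabilityCheck:
    """One boolean per evaluability standard (hypothetical field names)."""
    goals_agreed_and_realistic: bool
    information_needs_defined: bool
    data_obtainable: bool
    users_willing_and_able: bool

    def unmet(self) -> List[str]:
        """Names of the standards that are not yet satisfied."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def ready(self) -> bool:
        """Ready for useful evaluation only if every standard is met."""
        return not self.unmet()


# Hypothetical assessment of a program, for illustration only.
check = EvaluabilityCheck(
    goals_agreed_and_realistic=True,
    information_needs_defined=True,
    data_obtainable=False,
    users_willing_and_able=True,
)
print(check.ready())   # False
print(check.unmet())   # ['data_obtainable']
```

Listing the unmet standards, instead of returning only a pass/fail flag, points to what would need to change before an evaluation is likely to be useful.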

Selecting an exploratory evaluation approach

| Approach | When Appropriate |

|---|---|

| Evaluability assessment | Large, decentralized program; unclear evaluation criteria. |

| Rapid feedback evaluation | Agreement exists on the goals in terms of which a program is to be evaluated; need for evaluation information “right quick”; potential need for more definitive evaluation. |

| Evaluation synthesis | Need for evaluation information “right quick”; potential need for more definitive evaluation. |

| Small-sample studies | Agreement exists on the goals in terms of which a program is to be evaluated; collection of evaluation data will require sampling. |

Source: Newcomer, Hatry, and Wholey (2015)
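The table above can be sketched as a lookup from conditions to candidate approaches. The condition labels and the function name are hypothetical, and the sketch assumes a strict reading in which every condition listed for an approach must hold before it is suggested. The source does not state whether the conditions are meant jointly or individually.

```python
# Conditions listed in the table for each approach (labels are hypothetical).
CONDITIONS_BY_APPROACH = {
    "Evaluability assessment": {
        "large_decentralized_program",
        "unclear_evaluation_criteria",
    },
    "Rapid feedback evaluation": {
        "goals_agreed",
        "needed_quickly",
        "may_need_definitive_evaluation",
    },
    "Evaluation synthesis": {
        "needed_quickly",
        "may_need_definitive_evaluation",
    },
    "Small-sample studies": {
        "goals_agreed",
        "data_require_sampling",
    },
}


def candidate_approaches(conditions_met):
    """Return approaches whose listed conditions are all in conditions_met."""
    met = set(conditions_met)
    return [
        name
        for name, required in CONDITIONS_BY_APPROACH.items()
        if required <= met  # subset test: every required condition holds
    ]


# Hypothetical situation: goals are agreed, results are needed quickly,
# and a more definitive evaluation may follow.
print(candidate_approaches(
    {"goals_agreed", "needed_quickly", "may_need_definitive_evaluation"}
))
# ['Rapid feedback evaluation', 'Evaluation synthesis']
```

Under this reading, evaluation synthesis is suggested whenever rapid feedback evaluation is, because its listed conditions are a subset of the latter's. The output is a shortlist to discuss, not a decision.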

High Line Park Story

Photo credit: Gang He

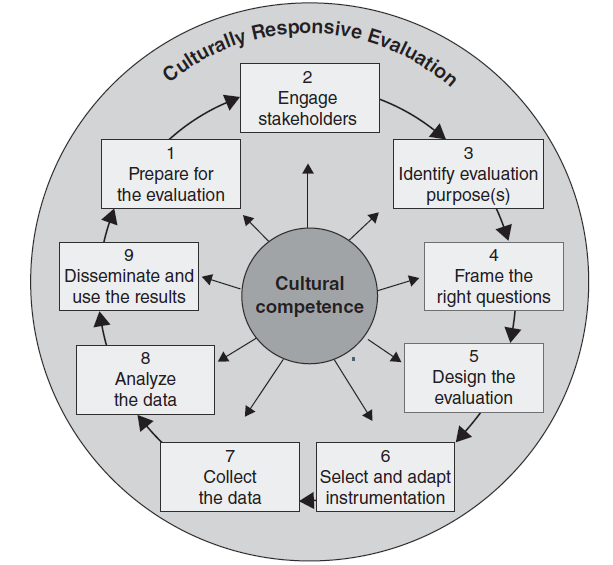

Culturally responsive evaluation

- Culture of the program that is being evaluated

- Cultural parameters

- Including equity

CRE framework

Source: Newcomer, Hatry, and Wholey (2015)

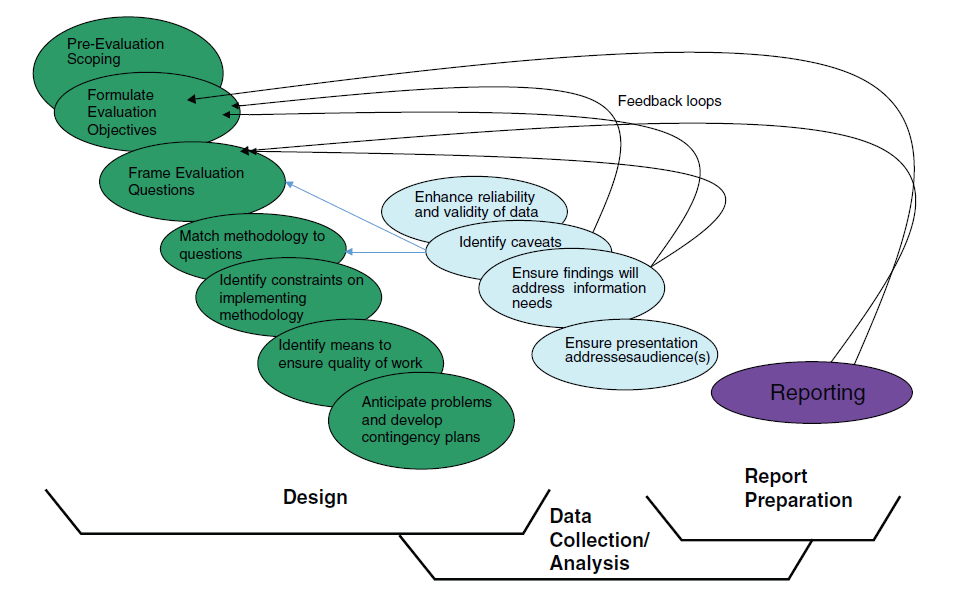

Evaluation process and feedback

Source: Newcomer, Hatry, and Wholey (2015)

GAO’s Evaluation Design Process

- clarify the study objectives;

- obtain background information on the issue and design options;

- develop and test the proposed approach;

- reach agreement on the proposed approach.

Read more: Designing Evaluations: 2012 Revision